In this DevOps journey, we have covered Git, Linux, and Docker so far. Now let's move on to a new topic: Kubernetes.

You can check out my previous blogs here - Recap of my blogs

Kubernetes, also known as “k8s” (a “k”, then 8 characters, then an “s”) or “kube”, is a container orchestration platform for scheduling and automating the deployment, management, and scaling of containerized applications.

Kubernetes is particularly useful for DevOps teams since it offers service discovery, load balancing within the cluster, automated rollouts and rollbacks, self-healing of containers that fail, and configuration management. Plus, Kubernetes is a critical tool for building robust DevOps CI/CD pipelines.

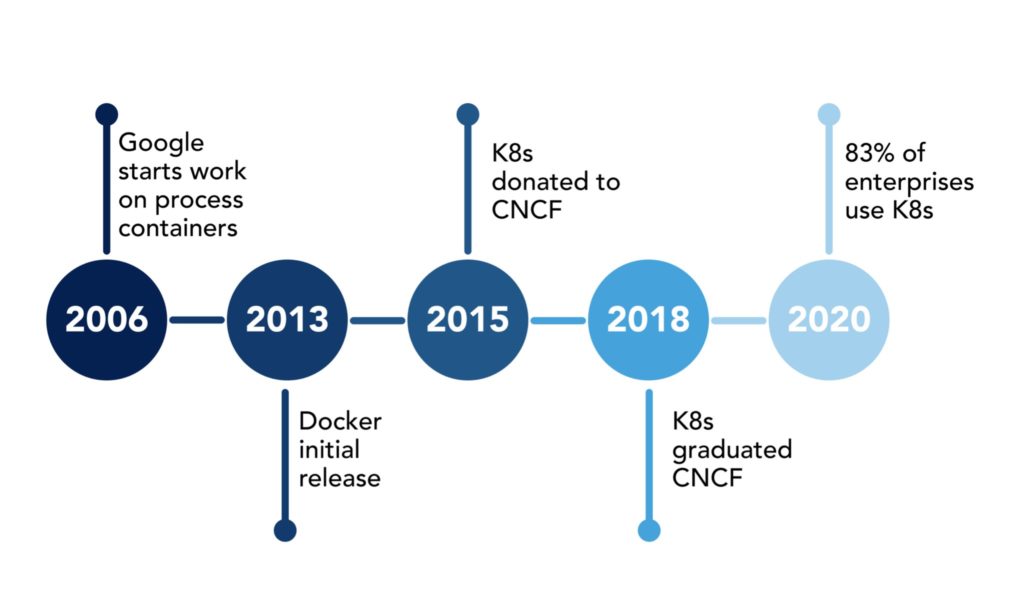

History of Kubernetes

Kubernetes has its roots in Google’s internal Borg system, introduced between 2003 and 2004. Later, in 2013, Google published a paper on another system known as Omega, a flexible, scalable scheduler for large compute clusters. In that same year, Craig McLuckie, Joe Beda, and Brendan Burns set out to develop a “minimally viable orchestrator.”

Kubernetes was first developed by engineers at Google and was open-sourced in 2014. As a descendant of Borg, it carries over ideas from the cluster manager that Google uses internally.

Kubernetes is Greek for helmsman or pilot, hence the ship's wheel (helm) in the Kubernetes logo.

Features of Kubernetes

Kubernetes offers many features that make it possible to manage clusters automatically, orchestrate containers across multiple hosts, and make better use of the underlying infrastructure.

Some main features are:

Auto-scaling - Automatically adjusts the number of running replicas, or the resources allocated to them, based on how heavily the containerized apps are being used.

Resilience and Self-healing - Application self-healing is provided through auto-placement, auto-restart, auto-replication, and auto-scaling.

Persistent Storage - The ability to mount and add storage dynamically.
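To make auto-scaling a bit more concrete, here is a minimal Python sketch of the replica calculation that the Kubernetes documentation describes for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, the `min_replicas`/`max_replicas` defaults, and the sample numbers are assumptions made up for this example. The real autoscaler also applies a tolerance and stabilization behaviour that is not modelled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Return the replica count suggested by the HPA-style ratio formula."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the replica count by how far the observed metric is from the target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (hypothetical defaults, see signature).
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 4 replicas at 20% average CPU with a 60% target: scale in.
    print(desired_replicas(4, 20, 60))
```

With these sample numbers, the first call suggests 6 replicas and the second suggests 2.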

Architecture of Kubernetes

The first thing to understand about Kubernetes is that it is a distributed system: it has multiple components spread across different servers over a network. These servers can be virtual machines or bare-metal servers. Together, they form a Kubernetes cluster.

A Kubernetes cluster consists of control plane nodes (also called master nodes) and worker nodes.

Control Plane

The control plane is responsible for container orchestration and maintaining the desired state of the cluster. It has the following components.

kube-apiserver

kube-scheduler

etcd

kube-controller-manager

cloud-controller-manager
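The idea of "maintaining the desired state" can be sketched as a reconciliation loop: compare what we want with what is actually running, then work out the actions that close the gap. This is a toy Python model and not real Kubernetes code. The `reconcile` function, the dictionaries, and the app names are all hypothetical.

```python
def reconcile(desired, observed):
    """Return the actions needed to move `observed` toward `desired`.

    Both arguments map an app name to a replica count. (Hypothetical
    data model; real controllers watch objects through kube-apiserver.)
    """
    actions = []
    for app in sorted(set(desired) | set(observed)):
        want = desired.get(app, 0)
        have = observed.get(app, 0)
        if have < want:
            # Too few replicas running: start the missing ones.
            actions.append(("start", app, want - have))
        elif have > want:
            # Too many replicas running (or app no longer wanted): stop extras.
            actions.append(("stop", app, have - want))
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "worker": 2}
    # Assume two "web" replicas crashed and a leftover job is still running.
    observed = {"web": 1, "worker": 2, "old-job": 1}
    for action in reconcile(desired, observed):
        print(action)
```

Running a check like this over and over is, roughly, what lets the cluster heal itself: whenever the observed state drifts, the next pass produces the actions to bring it back.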

Worker Node

The worker nodes are responsible for running containerized applications. Each worker node has the following components.

kubelet

kube-proxy

Container runtime
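To see how the control plane and the worker nodes fit together, here is a toy sketch of the kind of decision kube-scheduler makes when placing a pod: first filter out the nodes that do not have enough free capacity, then pick the best of the rest. The node names, the resource numbers, and the "most free CPU wins" scoring rule are assumptions for illustration only. The real scheduler uses many more filtering and scoring rules.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def pick_node(nodes, cpu_request, memory_request):
    """Return the name of a node that can fit the pod, or None."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes
                if n.free_cpu_millicores >= cpu_request
                and n.free_memory_mib >= memory_request]
    if not feasible:
        return None  # in a real cluster the pod would stay pending
    # Score: prefer the node with the most free CPU (hypothetical rule).
    return max(feasible, key=lambda n: n.free_cpu_millicores).name


if __name__ == "__main__":
    nodes = [
        Node("worker-1", 500, 1024),
        Node("worker-2", 1500, 512),
        Node("worker-3", 1000, 2048),
    ]
    print(pick_node(nodes, cpu_request=250, memory_request=1024))
    print(pick_node(nodes, cpu_request=2000, memory_request=128))
```

Once a node is chosen, it is the kubelet on that worker node that asks the container runtime to actually start the containers.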

Use Cases of Docker and Kubernetes

Deploying and managing microservices applications

Dynamic scaling

Running containerized applications on edge devices

Continuous integration and continuous delivery (CI/CD)

Docker vs Kubernetes

| Docker | Kubernetes |
| --- | --- |
| Creating, deploying, and running individual containers. | Orchestration and management of containers across clusters of machines. |
| Primarily focused on building and running containers; scaling is typically handled externally. | Manages deployment, scaling, load balancing, and self-healing of containerized applications. |
| Containerization platform for creating, packaging, and running applications in containers. | Container orchestration platform for managing and scaling containerized applications. |

So in this blog, we covered some of the basics of a new topic: Kubernetes.

In the next blog, we will walk through installing Kubernetes on a local machine (Ubuntu) and try out some basic operations.

Stay tuned!! Happy Learning 🚀